A new Maildrop

Modernizing a monolith that handles hundreds of thousands of messages per day.

Maildrop is a service that I started in 2013 as an experiment for new antispam filters for Heluna. It was meant to be a playground, a place where I could try new ideas and new technology that would ultimately benefit Heluna's clients. I had originally envisioned it as being very low-volume, because while Mailinator (the inspiration for Maildrop) had a huge amount of traffic, I wasn't planning on Maildrop being anywhere near as successful.

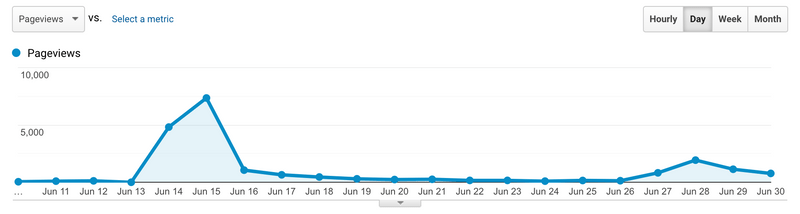

The original submission to Hacker News was late Friday night / early Saturday morning, and I wasn't expecting any traffic at all. You can guess what happened - the post stayed at the top of HN for most of that weekend, and got a ton of support pretty much instantly.

After the initial surge, things died down, but that post to HN created network effects: more tech blogs and social media accounts started writing about Maildrop, to the point where the service consistently sees thousands of users and a quarter of a million messages per day. Maildrop has aged very well, but it was time to make it a more modern application.

Why do a complete rewrite?

Over the years, I have had the uncanny knack of getting paged for server issues when I'm on vacation, and Maildrop was no different. As any web application tends to do, certain components would fail from time to time, necessitating some sort of hands-on maintenance. Logs growing out of control to a tipping point where they couldn't be rotated. JVM out of memory issues. Redis catching that one cosmic ray that caused it to core dump. Whatever random issues.

On my recent cruise, even though no production issues popped up, I recognized that I was carrying that bit of anxiety in the back of my head. Maildrop doesn't have all of the support systems in place like Heluna does, and so the idea that a relatively throwaway service would go offline weighed on me in the Mayan jungles of Mexico.

I've been working the past few years in environments where application downtime is an extreme rarity; embracing AWS (or Google or Azure if you like) allows you to offload entire services to your cloud provider, and not have to worry as much about service uptime.

Additionally, the Haraka framework intrigued me with how many messages it can process for large enterprises, so I brought Maildrop back to its roots as a playground for bringing a high-traffic mail service into the modern application environment.

The original Maildrop architecture

If you browse the old Maildrop repository on Github you can see the simple architecture for the service.

- One JVM running at 1GB max RAM for processing incoming email messages.

- One JVM running at 1GB max RAM for the website.

- Redis running at 512MB RAM to store emails.

- All running on one EC2 t2.small instance.

You can already see the shortcomings: there are a few single points of failure, all of which could in theory be taken care of in a more high-availability environment, but I wasn't planning on spending a lot of time on infrastructure, monitoring, and the like back in 2013.

The JVMs could occasionally restart themselves, and using a supervisor script to keep Redis running would work, but all of that is moot when your EC2 hypervisor just decides to stop - and everyone who runs on more bare-bones EC2 can testify to that happening more often than you think.

While the Maildrop architecture worked really well in 2013, as of 2019 I'm no longer interested in running yum update on an ongoing basis and having to periodically check into the AWS console to manually restart an instance after getting a page from a monitoring service or Cloudwatch.

How serverless can we be?

Before getting into what the new Maildrop architecture looks like, a few caveats.

First: A large share of antispam work depends on knowing information about the mail server connecting to you: IP address, TCP fingerprint, location, and so on.

Second: SMTP is a very stateful protocol. It behaves much more like a conversation than HTTP does, and while there has been a lot of research around speeding up HTTP (QUIC, HTTP/2), the underlying SMTP protocol has stayed largely unchanged. You can't run an SMTP server on Lambda at all, since neither SMTP nor Lambda is made for that.

Third: The PROXY protocol is a terrible hack and its support is shaky at best. SMTP is very hard to load balance well, which is why multiple MX records are a real thing.

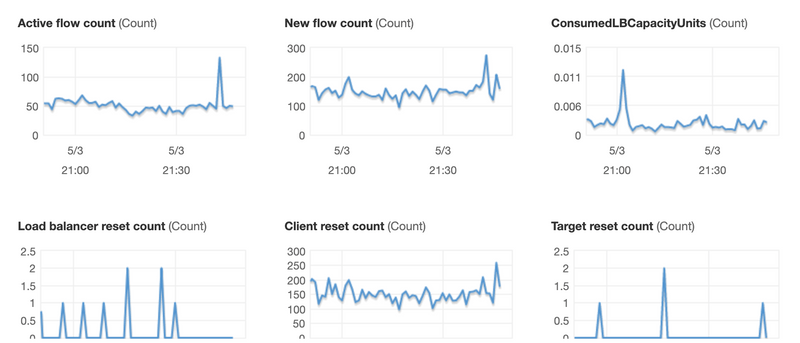

With those three caveats stated, there's still the opportunity to be relatively serverless here. When Amazon announced network load balancers with the ability to balance any port and preserve the source IP of the connection for the underlying service, that opened up a world of possibilities, including being able to safely load balance SMTP.

So that's what the new Maildrop does for high availability. Note that the price to load balance 250,000 email messages per day comes to about $20 per month and most of that price is the hourly cost of the load balancer. Handling the SMTP traffic itself costs around $5 per month.

The new Maildrop architecture

Since having an inexpensive network load balancer to route client connections opens us up to a much more elastic architecture, I decided to go all out. Maildrop now uses:

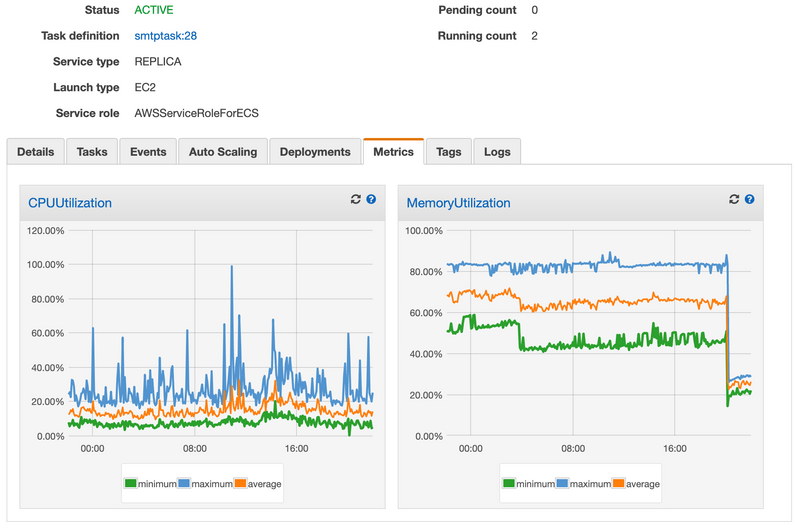

- A Docker container deployed to ECS, with a customized Haraka and instance of bind for DNS.

- Supervisord inside the containers to keep the applications alive and kill the container if it can't restart them.

- An autoscaling policy to make sure Maildrop is always processing messages with multiple containers always running and the ability to grow (and shrink!) based on CPU or RAM usage.

- AWS Elasticache for Redis to manage the email storage. I decided to upgrade from 512MB to a cache.t2.small with 1.5GB of available space.

- API Gateway and Lambda for web API calls.

- S3 for the website, running as a React app.

- Cloudflare for a CDN and DNS (though using Cloudfront would have worked here also).

While this architecture costs a little more than a single t2.small node, it doesn't cost much more and it is worth every penny to let Amazon take care of managing the infrastructure here. The autoscaling policy lets Maildrop add more containers dynamically when it needs, and drop them back down to a base level when the surge of emails subsides. It is very hard to overwhelm Maildrop with messages and deploying new versions of the service can be done automatically without any downtime. That's worth the extra cost.
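To make the grow-and-shrink behavior concrete, here is a minimal sketch of a proportional scaling rule. It is a simplification for illustration only, not AWS's actual algorithm and not Maildrop's real policy; the function name, the 60% target, and the container limits are all assumptions.

```typescript
// Simplified proportional scaling rule (an assumption, not AWS's exact algorithm):
// scale the container count by how far the observed metric is from its target,
// then clamp the result between a floor and a ceiling.
function desiredContainers(
  current: number,     // containers running now
  observedPct: number, // observed average CPU or RAM utilization, 0-100
  targetPct: number,   // utilization the policy tries to hold
  min: number,         // base level that is always running
  max: number          // upper bound during a surge
): number {
  const proportional = Math.ceil((current * observedPct) / targetPct);
  return Math.min(max, Math.max(min, proportional));
}

// Hypothetical numbers: two containers at 90% CPU against a 60% target.
console.log(desiredContainers(2, 90, 60, 2, 10)); // prints 3
// Surge over: four containers at 15% CPU shrink back to the floor of two.
console.log(desiredContainers(4, 15, 60, 2, 10)); // prints 2
```

The floor is what keeps multiple containers running at all times; the ceiling is what caps the bill during a flood of mail.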

Using Haraka to process email

Haraka is an SMTP server written in Javascript -- yes, really! -- that has matured quite a bit over the past year. The best part of Haraka is how extensible it is; writing a plugin for Haraka can be done very quickly, and the plugin can run at whichever point in the SMTP conversation you choose. It is robust, and works surprisingly well. It uses streams of data, it's very CPU and memory friendly, and it is easy to run.

It is not, however, easy to run in a container.

Haraka is heavily dependent upon text configuration files in various formats. That is no problem if you're running on an EC2 instance or similar, but publishing a container to Docker hub makes text file configuration a pain.

I spent a good amount of time writing startup scripts that read in environment variables, write out the correct configuration files, and then finally start Haraka. Here's a sample of what the script is doing:

    sed "/^stats_redis_host=/s/=.*/=${HOST}:${PORT}/" src/config/dnsbl.ini > tmpfile && mv tmpfile src/config/dnsbl.ini
    sed "/^host=/s/=.*/=${HOST}/" src/config/greylist.ini > tmpfile && mv tmpfile src/config/greylist.ini
    sed "/^port=/s/=.*/=${PORT}/" src/config/greylist.ini > tmpfile && mv tmpfile src/config/greylist.ini
    sed "/^host=/s/=.*/=${HOST}/" src/config/redis.ini > tmpfile && mv tmpfile src/config/redis.ini
    sed "/^port=/s/=.*/=${PORT}/" src/config/redis.ini > tmpfile && mv tmpfile src/config/redis.ini
    sed "/^level=/s/=.*/=${LOG}/" src/config/log.ini > tmpfile && mv tmpfile src/config/log.ini
    sed "/^altinbox=/s/=.*/=${ALTINBOX_MOD}/" src/config/altinbox.ini > tmpfile && mv tmpfile src/config/altinbox.ini

(If you'd like to submit a pull request for Haraka to read in process.env values, that would make Maildrop's life a lot easier.)
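Every sed line above follows one pattern: find the line that starts with `key=` and replace everything after the equals sign. As a rough sketch, the same substitution could be written in TypeScript on plain strings (no file I/O). The function name and the sample values are illustrative assumptions, not part of Maildrop's startup script.

```typescript
// Replace the value of every `key=...` line in INI-style text.
// Mirrors `sed "/^key=/s/=.*/=value/"`: only lines starting with `key=` change.
function setIniValue(text: string, key: string, value: string): string {
  return text
    .split("\n")
    .map((line) => (line.startsWith(`${key}=`) ? `${key}=${value}` : line))
    .join("\n");
}

// Hypothetical redis.ini contents and hypothetical environment values.
const redisIni = "host=127.0.0.1\nport=6379\ndb=0\n";
const updatedIni = setIniValue(
  setIniValue(redisIni, "host", "redis.internal"),
  "port",
  "6380"
);
console.log(updatedIni);
```

Lines that do not start with the key, such as `db=0` here, pass through untouched, which is the property that makes this kind of rewrite safe to run on every container start.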

I wrote a few plugins for Haraka: one to stream valid messages to Redis, a couple of unpublished plugins for antispam filtering (using Heluna), and a plugin for reporting, so Heluna customers can take advantage of the work that Maildrop is doing.

Next, the container has a stripped-down version of bind that caches DNS requests, so Maildrop doesn't overwhelm any DNSBL hosts. Last, it has an instance of supervisord with a custom configuration to kill the container if either Haraka or bind can't be restarted. (This lets ECS notice that the container is dead, and it will automatically start a new container as necessary.)
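In the real container, bind does the DNS caching. Purely to illustrate why a cache protects the DNSBL hosts, here is a small time-to-live cache sketch. The class name, the 60 second TTL, the fake clock, and the example hostname are all assumptions made for the example.

```typescript
type Resolver = (hostname: string) => string;

// Tiny TTL cache: repeat lookups inside the TTL never reach the upstream resolver.
class DnsCache {
  private readonly entries = new Map<string, { answer: string; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  resolve(hostname: string, upstream: Resolver): string {
    const cached = this.entries.get(hostname);
    if (cached !== undefined && cached.expiresAt > this.now()) {
      return cached.answer; // served locally, no upstream query
    }
    const answer = upstream(hostname);
    this.entries.set(hostname, { answer, expiresAt: this.now() + this.ttlMs });
    return answer;
  }
}

// Usage with a fake clock and a counting resolver (both hypothetical).
let fakeClockMs = 0;
let upstreamQueries = 0;
const dnsCache = new DnsCache(60000, () => fakeClockMs);
const fakeDnsbl: Resolver = () => {
  upstreamQueries += 1;
  return "127.0.0.2";
};

dnsCache.resolve("2.0.0.127.dnsbl.example", fakeDnsbl); // miss, asks upstream
dnsCache.resolve("2.0.0.127.dnsbl.example", fakeDnsbl); // hit, stays local
fakeClockMs = 61000;
dnsCache.resolve("2.0.0.127.dnsbl.example", fakeDnsbl); // expired, asks upstream again
console.log(upstreamQueries); // prints 2
```

Three lookups produce only two upstream queries, and a busy mail server that sees the same senders repeatedly benefits far more than this toy run suggests.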

The cost of two instances running at all times with room to spare? $19 per month. (At some point in the near future I'll write up how democratizing this is; this would have been thousands of dollars in the early days of the web.)

Lambda functions for an API

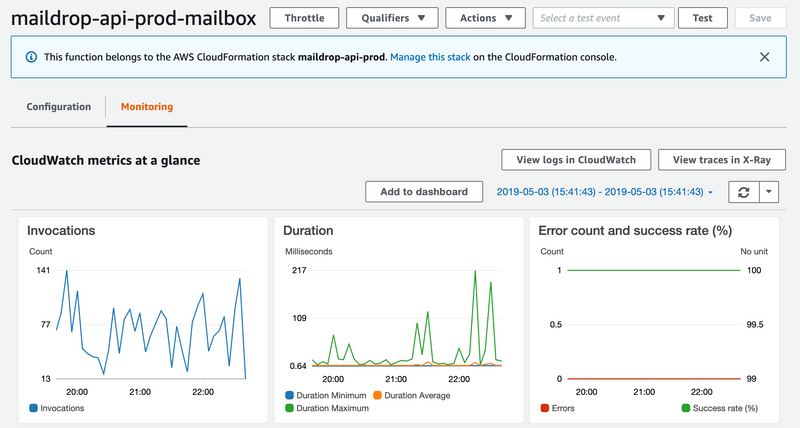

I've spoken about Lambda before, but using API Gateway and Lambda to access the Elasticache instance has been a no-brainer here. Cold start time of Lambda functions inside a VPC is still a pain; luckily, Maildrop sits in that sweet spot of traffic where it's almost never starting a fresh Lambda from nothing. The API itself is rate limited, since one of the issues that Maildrop would run into is rogue bash scripts out there trying to curl a mailbox a million times in a minute.
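As an illustration of what per-client rate limiting can look like, here is a minimal fixed-window limiter. It is a sketch under assumptions: the in-memory Map stands in for Redis, and the class name, limit, and window size are invented for the example rather than taken from Maildrop's code.

```typescript
// Fixed-window limiter. The Map stands in for Redis; with Redis the same idea
// is commonly an INCR on a per-client key plus an expiry set on the first hit.
class FixedWindowLimiter {
  private readonly hits = new Map<string, { count: number; windowStart: number }>();
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true when the request is allowed, false when the client is over the limit.
  allow(clientIp: string, nowMs: number): boolean {
    const entry = this.hits.get(clientIp);
    if (entry === undefined || nowMs - entry.windowStart >= this.windowMs) {
      this.hits.set(clientIp, { count: 1, windowStart: nowMs });
      return true;
    }
    entry.count += 1;
    return entry.count <= this.limit;
  }
}

// Hypothetical policy: three requests per minute per IP address.
const limiter = new FixedWindowLimiter(3, 60000);
const decisions = [0, 0, 0, 0].map((t) => limiter.allow("203.0.113.7", t));
console.log(decisions);                             // [ true, true, true, false ]
console.log(limiter.allow("203.0.113.7", 60000));   // true, a new window has started
```

A fixed window is the simplest variant; it allows short bursts at window boundaries, which is usually acceptable when the goal is only to stop runaway scripts.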

I cannot stress enough how freeing Lambda has been. Knowing that you have application code that will run no matter what is a breath of fresh air.

Here's the Lambda for retrieving a mailbox in its entirety. Note that most of the business logic is out of the function, to make unit testing easier.

    export async function listHandler(event: APIGatewayProxyEvent): Promise<APIGatewayProxyResult> {
      const ip = "" + event.requestContext.identity.sourceIp;
      const mailbox = (event.pathParameters || {})["name"];
      return ratelimit(ip, client).then(() => {
        console.log(`client ${ip} requesting mailbox ${mailbox}`);
        return getInbox(mailbox);
      }).then((results) => {
        return {
          statusCode: 200,
          headers: {
            'Access-Control-Allow-Origin': '*',
            'Access-Control-Allow-Credentials': true,
          },
          body: JSON.stringify({
            altinbox: encryptMailbox(mailbox),
            messages: results
          })
        };
      }, (reason) => {
        console.log(`error for ${ip} : ${reason}`);
        return {
          statusCode: 500,
          headers: {
            'Access-Control-Allow-Origin': '*',
            'Access-Control-Allow-Credentials': true,
          },
          body: JSON.stringify({error: reason})
        };
      });
    }

The absolutely amazing thing about Lambda is that:

- I don't have to worry about application servers.

- I don't have to worry about sessions.

- I don't have to worry about scaling.

- I don't have to worry about any of that excess garbage that traditional applications have to deal with.

In short, having a pipeline run "npm run compile && npm run deploy" to ship a new version of your API is so immensely worth the tradeoffs that Lambda brings to the table.

Total cost of API Gateway and Lambda functions? $9 per month, and most of that is Amazon's massive margins on API Gateway. (Seriously Amazon, $3.50 per million requests is complete highway robbery.)
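For a sense of scale, the per-request price quoted above turns into a monthly figure with one multiplication. The sketch below uses only the $3.50 per million rate from the text; the request volume is a hypothetical input, not Maildrop's measured traffic, and the calculation ignores Lambda charges and any free tier.

```typescript
const PRICE_PER_MILLION_USD = 3.5; // rate quoted in the text

// Monthly API Gateway request charge for a given number of requests.
function apiGatewayMonthlyCost(requestsPerMonth: number): number {
  return (requestsPerMonth / 1000000) * PRICE_PER_MILLION_USD;
}

// Hypothetical volume: two million API calls in a month.
const hypotheticalRequests = 2000000;
console.log(apiGatewayMonthlyCost(hypotheticalRequests).toFixed(2)); // prints 7.00
```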

S3 for hosting the web client

I've already spoken about what S3 has done for the web. Storage and HTTP serving of static assets of any size at any time, with the SLA guarantee that Amazon (or Google or Azure) offers, is transformational.

Maildrop is written as a React application. With webpack and svg icons and using flexbox for layout, the total bundle size is extremely small - roughly 60k to serve the entire site. The web fonts from Google are larger than the application code! I'm extremely proud of how quickly Maildrop performs on the client side. It was fast before, but it was almost entirely rendered on the server, which, given that it's 2019, we don't need to do anymore.

Total cost of hosting Maildrop on S3? 28 cents per month.

Cloudflare for DNS and CDN

Similarly, I've talked about how using Cloudflare has helped every single application that I've created. Fast DNS responses are a competitive advantage, and Cloudflare's CDN lets me cache Maildrop's assets for no extra cost. The application absolutely loads faster because of Cloudflare.

Could I use Cloudfront here? Sure, and in fact I'm using Cloudfront for the Lambda API, but serving up static assets through Cloudflare is just easy.

Switching from Github to Gitlab

While Gitlab is less visible for open source projects, the automated pipelines, build process, and support are worth the tradeoff. On the old Maildrop, to run a build, I'd have to package together the two applications with sbt, collect the subsequent zip files, ship them to the EC2 instance, unzip them, and restart the two services.

Automating that was a serious pain, and while I could have written a CloudFormation template, sent the zip to S3, etc, I wasn't doing enough deploys to make that worthwhile. I had to make a change to Maildrop in 2016, and it took me a while to remember that I needed a very specific version of sbt to deploy the site.

Switching to Gitlab for the repository lets me offload all of the build process. Now, I have a customized pipeline that only runs steps when necessary. For example, Maildrop doesn't need to redeploy its Lambda functions if a CSS file changes. Similarly, the web client doesn't need to be rebuilt if we're only making a change to the Haraka code.
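The decision behind "only runs steps when necessary" can be sketched as a mapping from changed paths to jobs. In Gitlab CI this kind of condition is normally declared in the pipeline configuration itself; the TypeScript below only illustrates the logic, and the job names and directory layout are hypothetical, not Maildrop's actual repository structure.

```typescript
type Job = "haraka-image" | "lambda-api" | "web-client";

// Hypothetical repository layout: which top-level directory feeds which job.
const jobPaths: Record<Job, string> = {
  "haraka-image": "smtp/",
  "lambda-api": "api/",
  "web-client": "web/",
};

// Return only the jobs whose source directory contains a changed file.
function jobsFor(changedFiles: string[]): Job[] {
  return (Object.keys(jobPaths) as Job[]).filter((job) =>
    changedFiles.some((file) => file.startsWith(jobPaths[job]))
  );
}

console.log(jobsFor(["web/src/styles.css"]));    // [ 'web-client' ]
console.log(jobsFor(["smtp/plugins/queue.js"])); // [ 'haraka-image' ]
```

A CSS-only commit triggers just the web client build, and a Haraka-only commit leaves both the Lambda functions and the web client alone.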

This has also been a very freeing process. I can check out the repository, make a quick change, test it locally, then commit the change and have the site built automatically, deployed automatically, and I don't have to touch anything to make that work. Between Gitlab and Amazon, all of the mundane details of deploying software are taken care of for me.

Total cost of automated builds and deploys? Zero.

Conclusions, and an invitation

Thanks for reading about the new Maildrop! What I learned rewriting Maildrop, over the course of about a sprint and a half, is that the JVM is becoming ever more specialized: a solid Docker container running a node application can handle a good percentage of the traffic that a JVM service can. Add in the ability to horizontally scale very easily, and you have a recipe for Javascript (and Typescript - more on that later) to replace a lot of traditional JVM-based services.

I learned that spinning up all of the infrastructure via Terraform makes the entire process of rolling out a new application much easier. Where previously I'd worry about clicking my way through the console, wondering if I'd gotten all of the flags right for ECS or the like, now a small amount of code gives me an environment that I can deploy to whenever I like.

I also learned that for only a small cost increase (less than $25 extra per month) you can buy a huge amount of peace of mind. Where I used to have that nagging feeling in the back of my head that I'd get a page about something at Maildrop failing, now I have complete confidence in my architecture being resilient and fault tolerant.

In short, I can't wait to go on another cruise, and that's worth every penny of additional AWS cost.

To wrap up, do you want to get involved with a project that has a good mix of services and new technology? I'd like to invite you to join the Maildrop project! It's a great stable service that has plenty of room to grow, plenty of features to add, and can be a great playground for you.